"Harinder, How are our services performing? Anything out of the ordinary I need to be aware of?"

Background

One of my previous roles involved establishing a Performance Engineering capability for a service domain. The domain had numerous squads, each responsible for developing different service functionality and each with its own operating model.

During my early days, I was the only one responsible for running performance tests and advising squads on performance optimization opportunities. I was also responsible for updating management on our progress in developing the capability and improving service performance. In general, the domain structure resembled the following.

Context

After I joined the organization, I noticed a few patterns which suggested a need for a different approach if we were to take performance testing and engineering seriously. These are some of the patterns I noticed.

Also, every week, I was required to attend a meeting with our domain's Head to provide updates. In this meeting, the Head would always ask me, "Harinder, how are our services performing? Anything out of the ordinary that I need to be aware of?" And I would assure him that there were no noteworthy performance or stability issues that warranted immediate attention. He would take my words at face value. He trusted my view. It was great, but this approach would not be sustainable in the long run. There was an opportunity to give him visibility into service performance and to have data for better discussion and decision-making. This led me to develop the "PerfOps Framework" for the domain.

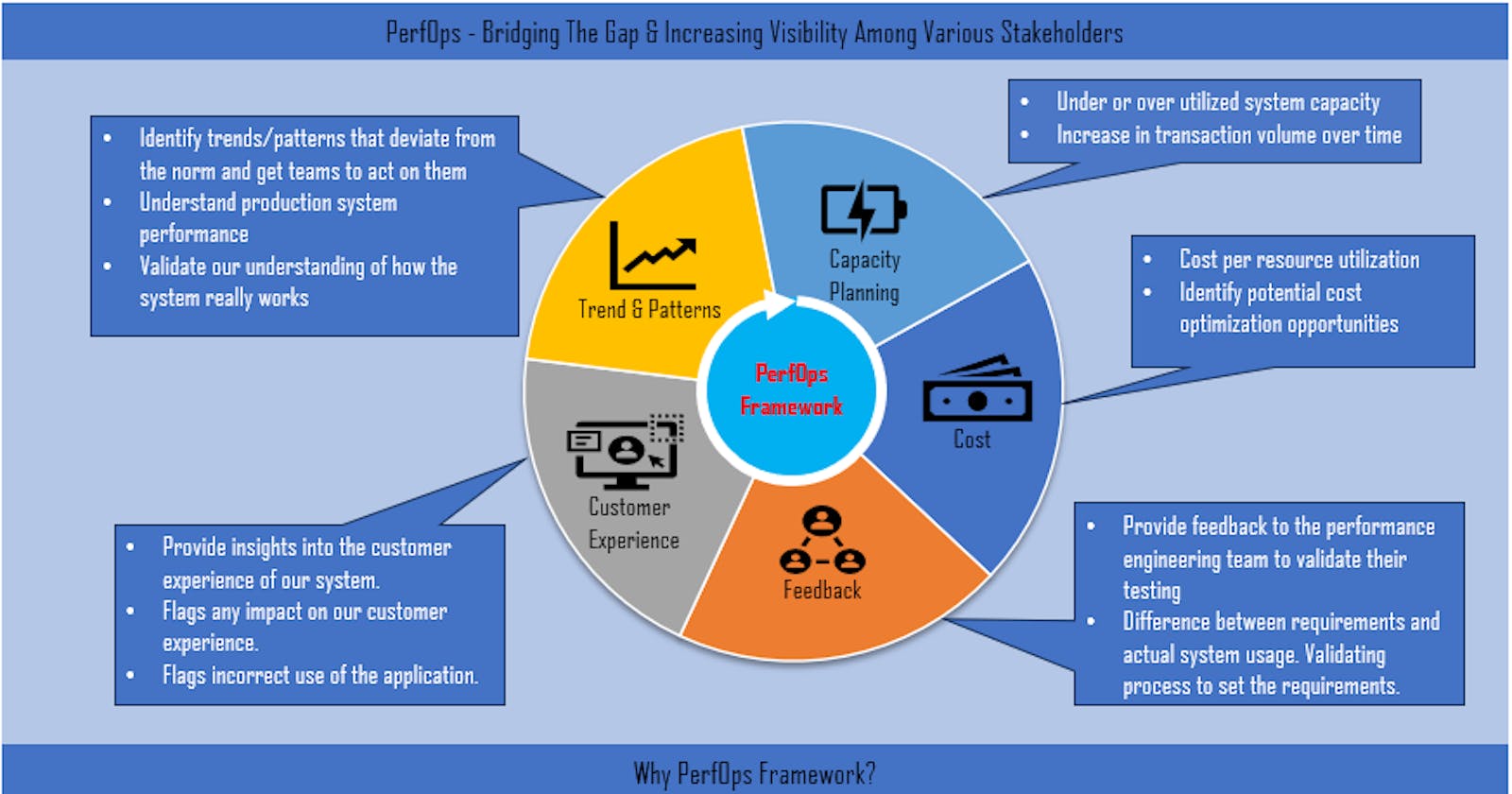

PerfOps Framework

We used the PerfOps framework as a vehicle for:

Visibility - The level of visibility into application performance varied across teams and leadership, depending on their maturity level. At the end-to-end application level, there was hardly any visibility.

Reporting - Different stakeholders required different levels of reporting. We needed a framework that standardized reporting while tailoring it to the different reporting structures. For example, developers and leads had different requirements compared to management.

Collaboration - The framework was about building and fostering a culture of shared responsibility, where everyone in the domain is accountable for the operational success of their own service and of the domain as a whole. This area was an eye-opener for the squads, especially regarding the operational requirements and how disjointed the collaboration was.

Accountability - The PerfOps framework was crucial in ensuring shared accountability across the domain. All squads were interdependent, relying on services provided by each other and integrated within the same domain, so a shared accountability approach was necessary. However, managing operational requirements posed a challenge, and, as with collaboration, it took time for the squads to adjust to this approach.

Optimization - Having the ability to view and report on different levels was crucial in aligning everyone towards optimizing the application. It led to productive conversations among stakeholders, enabling them to express their concerns and highlight the importance of application optimization (and cost reduction) to upper management. It also served as a catalyst for the reprioritization of work across the different teams and domains, and it gave a developer the opportunity to raise a concern directly with the Head of the domain.

This is not an exhaustive list, but here is a snippet of the areas the PerfOps framework focused on:

Trends & Patterns

Identify trends/patterns that deviate from the norm and get teams to act on them

Understand our production system performance. There were a few "Aha" moments for everyone.

Capacity Planning

Under or over-utilized system capacity

Increase in transaction volume over time

Help with capacity planning

Cost

Identify potential cost optimization opportunities

Cost per resource utilization

Customer Experience

Flag any impact on our consumer experience

Flag incorrect use of the application

Provide insights into the consumer experience of our system.

Feedback

Provide feedback to the performance engineering team to validate their testing

Highlight the difference between requirements and actual system usage, which helps validate the process used to set the requirements.
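To make the first two areas a little more concrete, here is a minimal sketch of what "deviating from the norm" and "under or over-utilized" could look like in code. It is only an illustration: the function names, the baseline window, the thresholds, and the sample numbers are all hypothetical and are not taken from the framework as we actually built it.

```python
from statistics import mean, stdev


def flag_deviations(samples, baseline_size=5, threshold=3.0):
    """Flag samples that sit far outside a baseline window.

    The first `baseline_size` samples define the "norm". Any later sample
    more than `threshold` standard deviations from the baseline mean is
    returned as an (index, value) pair.
    """
    baseline = samples[:baseline_size]
    mu = mean(baseline)
    sigma = stdev(baseline)
    flagged = []
    for i, value in enumerate(samples[baseline_size:], start=baseline_size):
        # A zero sigma means the baseline is flat; skip to avoid false alarms.
        if sigma > 0 and abs(value - mu) > threshold * sigma:
            flagged.append((i, value))
    return flagged


def classify_utilization(percent, low=30.0, high=80.0):
    """Label a utilization percentage using hypothetical cut-offs."""
    if percent < low:
        return "under-utilized"
    if percent > high:
        return "over-utilized"
    return "ok"


# Hypothetical weekly p95 response times in milliseconds.
weekly_p95_ms = [100, 102, 98, 101, 99, 100, 180, 103]
deviations = flag_deviations(weekly_p95_ms)

# Hypothetical average CPU utilization per service.
cpu_by_service = {"orders": 12.0, "payments": 91.0, "search": 55.0}
capacity_report = {name: classify_utilization(pct) for name, pct in cpu_by_service.items()}
```

In practice the baseline would come from production monitoring data rather than a hard-coded list, and the cut-offs would be agreed with each squad rather than fixed in code.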

In the follow-up post, I will describe how we implemented the PerfOps Framework (see the diagram below), who was involved, the challenges we encountered, and its benefits for all the stakeholders.

------------------------------------------------------------------------------------

Thanks for reading!

Github repo: hseera